Are we inching towards dystopia one sumo cat at a time?

How far have text-to-image generative algorithms progressed? What does this mean for society and businesses? In this post, we review DALL-E 2 and Imagen, which have received a lot of publicity in recent weeks, and briefly discuss how such algorithms can, counterintuitively, enhance the data privacy of individuals.

Google Imagen and OpenAI DALL-E 2 text-to-image algorithms

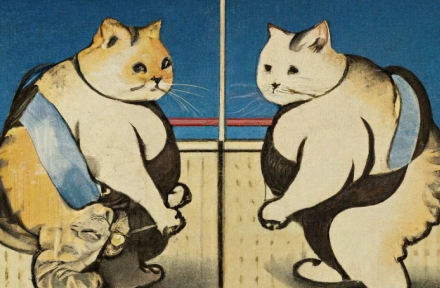

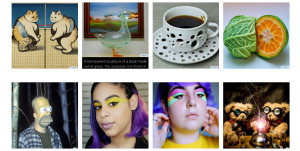

Images of imagined fruits, cats doing sumo and beautiful human faces, created using OpenAI's DALL-E 2 and Google's Imagen text-to-image algorithms, have received a lot of publicity on social platforms. Text-to-image technology is not new, but it has recently reached a level that has caused existential angst among many logo and graphic designers, as well as lawyers specializing in patents. The most unpredictable and often most costly part of content production is the creative minds themselves, who, unlike software, cannot be switched on and off on command or pushed to produce high-quality work instantly. Text-to-image and text-to-video models change this: the user inputs a description of an image or video, and the algorithm generates several versions, from which we "only" have to choose the best one.

The first row shows images produced by Google Imagen; the second row shows images produced by OpenAI DALL-E 2. All of them were created using text-only commands (for the commands and sources, see the references at the end of the article).

What is wrong with large AI models, and why is the problem becoming more severe over time?

The field of artificial intelligence is currently dominated by deep learning methods. A peculiarity of deep learning applications is that they follow Feuerbach's famous saying, "You are what you eat". Deep learning algorithms learn from huge datasets, and the accuracy of a given model depends largely on the quality of its dataset. If the dataset is biased in some way (e.g. it contains only old men instead of people of all ages) or mislabeled (it contains airplanes labeled as chairs), the resulting model will reflect those flaws. This is particularly dangerous in models used for media content generation, because a poorly functioning model can massively reinforce racial, religious or gender stereotypes, or malfunction in other ways. Both OpenAI and Google are aware of the risk, so their most advanced algorithms are currently sold and used only under the supervision of the respective company.

The privacy paradox of audiovisual content generators: what you lose on the swings you gain on the roundabouts

Although algorithmic mass production of audiovisual content promises a dire future in virtually every other respect, it could, counterintuitively, enhance the protection of individuals' privacy. Audiovisual personal data is valuable precisely because it is scarce. We share pictures and videos with our friends because we want to show them memories of unique events that happened to us. Companies publish pictures of their events to build trust with their partners and future employees. Today, the choice for audiovisual content is binary: either take the risk of publishing personal data or do not publish at all. Soon, however, models such as DALL-E 2 and Imagen may let us publish a version of our photos and videos that is almost identical to the original in every respect, but that does not depict the actual person. This way, published content will retain our essence while offering us plausible deniability as a strong protector of our privacy.

Sources of the pictures:

Google Imagen

- @hardmaru, Twitter: Ukiyo-e painting of two cats in a Sumo match at the National Sumo Stadium in Ryogoku, Japan

- Google: A transparent sculpture of a duck made out of glass.

- @irinablok, Twitter: Coffee cup with many holes.

- @irinablok, Twitter: One piece of fruit that's cabbage on the outside, orange texture on the inside, cut in half.

OpenAI DALL-E 2

- @Dalle2pics, Twitter: A still of Homer Simpson in The Blair Witch Project.

- @djbaskin_images, Twitter: Two human faces with makeup.

- @djbaskin_images, Twitter: Two human faces with makeup.

- OpenAI: Teddy bears mixing sparkling chemicals as mad scientist in steampunk style.

(Featured image: @hardmaru / Twitter)

Date: 14 July 2022 | Topic: Business